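Like the vanishing gradient problem, the exploding gradient problem arises during backpropagation. The chain rule multiplies the gradient by one local derivative per layer. When those factors are consistently larger than 1, they compound as the signal travels back through a deep network in a chain-reaction-like way. The gradients reaching the early layers become excessively large, and the weight updates grow uncontrollably. This instability often leads to numerical overflow: values first become infinite (Inf), and further arithmetic on them produces ‘Not a Number’ (NaN) values.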
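To make the compounding concrete, here is a minimal sketch that treats each layer's local derivative as a single scalar. This is a deliberate simplification, since real layers contribute Jacobian matrices rather than numbers. The helper `backprop_scalar_gradient` is hypothetical and written only for illustration.

```python
import math


def backprop_scalar_gradient(local_derivative: float, num_layers: int,
                             upstream_grad: float = 1.0) -> float:
    """Apply the chain rule through `num_layers` identical scalar layers.

    dL/dx_0 = dL/dx_n * (local_derivative ** num_layers), computed by repeated
    multiplication so that float overflow happens step by step, as in backprop.
    """
    grad = upstream_grad
    for _ in range(num_layers):
        grad *= local_derivative  # one chain-rule factor per layer
    return grad


# A per-layer factor of 1.5 grows roughly as 1.5 ** depth.
for depth in (10, 50, 100):
    print(depth, backprop_scalar_gradient(1.5, depth))

# With a large enough factor and depth, the float64 product overflows to inf...
g = backprop_scalar_gradient(10.0, 400)
print(g)        # inf
# ...and further arithmetic on inf yields NaN.
print(g - g)    # nan
```

A factor below 1 (for example 0.5) makes the same product shrink toward zero instead, which is the vanishing gradient case. Python floats are 64-bit; the 32-bit floats common in deep learning overflow much sooner.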

Spotting the Exploding Gradient Problem

Exploding gradients make training unstable, and they leave telltale signs. You can detect them in a few key ways:

- Erratic Loss and Accuracy Curves: During training, the loss curve should generally trend smoothly downward and the accuracy curve upward. Sudden, erratic spikes in these curves are a strong indicator that the gradients have become excessively large, causing the optimizer to “overshoot” the optimal solution.

- NaN or Infinite Values: The most severe symptom is when the model’s weights or the loss suddenly become NaN (Not a Number) or Inf (Infinity). This happens when values grow so large that they exceed the range the floating-point format can represent (about 3.4 × 10^38 for 32-bit floats), essentially “breaking” the training process. Once a NaN appears, it propagates through every subsequent computation and weight update, so the model can no longer be trained from that state.
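Both symptoms can be watched for programmatically. The sketch below is written in plain Python so it stays framework-agnostic. It computes the global L2 norm of one training step's gradients and flags non-finite values. The function name `gradient_health` and the default threshold of 1e3 are hypothetical. A sensible threshold depends on the model, and in practice you would log the norm every step and plot it next to the loss curve.

```python
import math
from typing import Iterable, Sequence


def gradient_health(grads: Iterable[Sequence[float]], max_norm: float = 1e3) -> str:
    """Classify one training step's gradients as 'ok', 'exploding', or 'non-finite'.

    grads: one flat sequence of gradient values per parameter tensor.
    max_norm: hypothetical alarm threshold for the global L2 norm.
    """
    total_sq = 0.0
    for tensor in grads:
        for g in tensor:
            if not math.isfinite(g):
                return "non-finite"  # a NaN or Inf is already present
            total_sq += g * g  # may overflow to inf for huge but finite values
    global_norm = math.sqrt(total_sq)  # sqrt(inf) is inf, which still exceeds max_norm
    return "exploding" if global_norm > max_norm else "ok"


print(gradient_health([[0.1, -0.2], [0.05]]))   # ok
print(gradient_health([[5000.0], [0.0]]))       # exploding
print(gradient_health([[0.1, float("nan")]]))   # non-finite
```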

A Note on the ReLU Activation Function

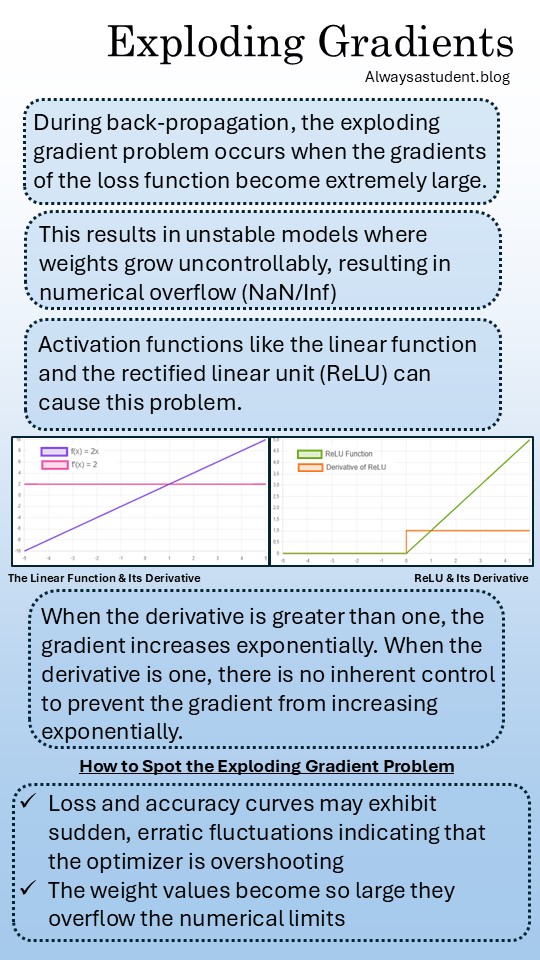

While the Rectified Linear Unit (ReLU) activation function is a common and effective remedy for the vanishing gradient problem, it can introduce issues of its own. ReLU’s derivative is exactly 1 for all positive inputs. This simple, non-saturating behavior is what keeps gradients from shrinking toward zero during backpropagation. However, it also means nothing in the activation itself controls or “dampens” the gradient if it starts to grow too large.

In effect, by solving one problem, ReLU opens the door to the other. Because the gradient passes through active ReLU units unscaled, its magnitude is governed by the layers’ weights. If those weights amplify the signal, the amplification compounds across many layers, leading to the exploding gradient problem.
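The sketch below illustrates this point under simplifying assumptions. It uses a stack of fully connected ReLU layers without biases, Gaussian weights with variance `gain**2 / width`, and an all-ones upstream gradient. All names (`input_grad_norm`, `gain`, and so on) are hypothetical. ReLU's derivative is 1 on active units and 0 otherwise, so only the weights scale the backward signal. Each layer multiplies the expected squared gradient norm by roughly `gain**2 / 2`, where the 1/2 comes from about half the units being inactive. A gain of √2, which matches the weight variance 2/width used by He initialization, keeps the norm roughly stable, while larger gains make it explode.

```python
import math
import random
from typing import List


def relu(z: float) -> float:
    return z if z > 0.0 else 0.0


def relu_grad(z: float) -> float:
    # ReLU's derivative: exactly 1 for positive inputs, 0 otherwise
    # (the value at z == 0 is a convention; 0 is used here).
    return 1.0 if z > 0.0 else 0.0


def matvec(w: List[List[float]], v: List[float]) -> List[float]:
    # Computes W @ v for a row-major matrix W.
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in w]


def input_grad_norm(depth: int, width: int, gain: float, seed: int = 0) -> float:
    """L2 norm of dL/dx after backpropagating through `depth` ReLU layers.

    Each layer computes h_next = relu(W @ h), with W drawn from N(0, gain**2 / width).
    The upstream gradient dL/dh_depth is assumed to be all ones.
    """
    rng = random.Random(seed)
    std = gain / math.sqrt(width)
    weights = [[[rng.gauss(0.0, std) for _ in range(width)] for _ in range(width)]
               for _ in range(depth)]

    # Forward pass: keep each layer's pre-activations for the backward pass.
    h = [rng.gauss(0.0, 1.0) for _ in range(width)]
    pre_acts = []
    for w in weights:
        z = matvec(w, h)
        pre_acts.append(z)
        h = [relu(zi) for zi in z]

    # Backward pass: dL/dz = relu'(z) * dL/dh, then dL/dh_prev = W^T @ dL/dz.
    grad = [1.0] * width
    for w, z in zip(reversed(weights), reversed(pre_acts)):
        local = [g * relu_grad(zi) for g, zi in zip(grad, z)]
        grad = [sum(w[i][j] * local[i] for i in range(width)) for j in range(width)]
    return math.sqrt(sum(g * g for g in grad))


for gain in (0.5, math.sqrt(2.0), 3.0):
    norm = input_grad_norm(depth=30, width=32, gain=gain)
    print(f"gain={gain:.2f}  |dL/dx| = {norm:.3e}")
```

With 30 layers, a gain of 0.5 should drive the norm toward zero, while a gain of 3.0 should push it many orders of magnitude higher. The exact values depend on the random seed.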
