The perceptron algorithm together with the Heaviside step function can only be used for binary classification. To use the algorithm for other types of classification, the Heaviside function can be replaced by one of several other activation functions. Non-linear activation functions, used in the layers of a multi-layer network, make it possible to separate data that is not linearly separable.
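As a minimal sketch of the starting point, here is the classic perceptron learning rule with a Heaviside activation, written with NumPy. The helper names (`heaviside`, `train_perceptron`), the learning rate, the epoch count and the logical-AND toy data are illustrative assumptions, not the author's code. Because the output is either 0 or 1, it can only express two classes.

```python
import numpy as np


def heaviside(z):
    """Heaviside step function: 1 for z >= 0, else 0 (the value at 0 is a convention)."""
    return np.where(z >= 0, 1, 0)


def train_perceptron(X, y, lr=1.0, epochs=20):
    """Classic perceptron learning rule with a Heaviside activation.

    X: (n_samples, n_features) inputs; y: labels in {0, 1}.
    With zero-initialised weights the learning rate only rescales w and b.
    """
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        for xi, target in zip(X, y):
            pred = heaviside(xi @ w + b)
            error = target - pred      # -1, 0 or +1
            w += lr * error * xi       # move the boundary toward the correct side
            b += lr * error
    return w, b


# Logical AND is linearly separable, so the perceptron converges on it.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1])
w, b = train_perceptron(X, y)
print(heaviside(X @ w + b))  # prints [0 0 0 1]
```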

Popular activation functions:

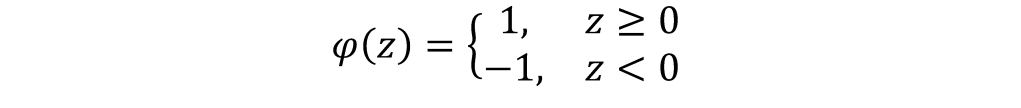

- Sign Function

- Useful for binary classification when the data needs to be separated into positives and negatives

- Mathematical definition:

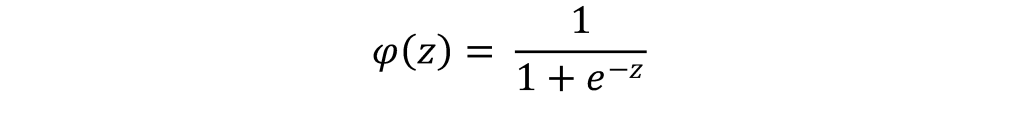
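A short NumPy sketch of the sign function used as a classifier output. `np.sign` returns 0 for an input of exactly 0, so the hypothetical `predict` helper below assigns boundary points to +1; that tie-breaking rule, the weights and the sample points are assumptions made only for illustration.

```python
import numpy as np


def sign(z):
    """Sign function: -1 for negative inputs, 0 at zero, +1 for positive inputs."""
    return np.sign(z)


def predict(X, w, b):
    """Classify each row of X as +1 or -1 from the sign of w.x + b.

    Points lying exactly on the boundary (w.x + b == 0) are assigned to +1;
    this tie-breaking rule is an assumption, conventions differ.
    """
    s = sign(X @ w + b)
    return np.where(s == 0, 1, s)


# Hypothetical weights and bias, chosen only for illustration.
w = np.array([1.0, -1.0])
b = 0.0
X = np.array([[2.0, 1.0], [1.0, 3.0], [1.0, 1.0]])  # the last point lies on the boundary
print(predict(X, w, b))
```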

- Sigmoid Function

  - Also known as the logistic function

  - Smooth, S-shaped function whose output always lies between 0 and 1

  - Useful for inputs that need to be mapped to probabilities

  - Mathematical definition: $\sigma(x) = \dfrac{1}{1 + e^{-x}}$
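Evaluating $1/(1+e^{-x})$ literally makes `np.exp(-x)` overflow (with a runtime warning) for large negative $x$. A common remedy, sketched here in NumPy with a hypothetical function name, is to switch to the algebraically equivalent form $e^{x}/(1+e^{x})$ for negative inputs so that `exp` never receives a large positive argument.

```python
import numpy as np


def sigmoid(x):
    """Logistic function 1 / (1 + exp(-x)), evaluated in a numerically stable way."""
    x = np.asarray(x, dtype=float)
    out = np.empty_like(x)
    pos = x >= 0
    # For x >= 0, exp(-x) <= 1, so this branch cannot overflow.
    out[pos] = 1.0 / (1.0 + np.exp(-x[pos]))
    # For x < 0, use exp(x) / (1 + exp(x)); exp(x) < 1 here as well.
    ex = np.exp(x[~pos])
    out[~pos] = ex / (1.0 + ex)
    return out


# Extreme inputs saturate to 0 and 1 without overflow warnings.
print(sigmoid([-1000.0, 0.0, 1000.0]))
```

The symmetry $\sigma(-x) = 1 - \sigma(x)$ is what makes the two branches agree, which is why the output can be read as a probability for either class.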

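- ReLU (Rectified Linear Unit) Function

  - Introduces non-linearity

  - Useful for regression

  - Should generally be used only in the hidden layers

  - Mitigates the vanishing gradient problem: its derivative is 1 for every positive input, so gradients passing through active units are not shrunk

  - Mathematical definition: $\operatorname{ReLU}(x) = \max(0, x)$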
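To make the vanishing-gradient point concrete, the sketch below compares the derivative of ReLU with that of the sigmoid, whose derivative $\sigma(x)(1-\sigma(x))$ never exceeds 0.25. It is a toy illustration under a deliberately simplifying assumption: the backpropagated gradient is modelled as a plain product of per-layer activation derivatives (weights are ignored), with every unit receiving a pre-activation of 1.0. The helper names are hypothetical.

```python
import numpy as np


def relu(x):
    """ReLU(x) = max(0, x), applied element-wise."""
    return np.maximum(0.0, x)


def relu_grad(x):
    """Derivative of ReLU: 1 for x > 0, 0 otherwise (0 at x == 0 by convention)."""
    return (np.asarray(x) > 0).astype(float)


def sigmoid_grad(x):
    """Derivative of the logistic function, s * (1 - s); at most 0.25 (at x = 0)."""
    s = 1.0 / (1.0 + np.exp(-np.asarray(x, dtype=float)))
    return s * (1.0 - s)


x = np.array([-2.0, 0.0, 3.0])
print(relu(x))       # prints [0. 0. 3.]
print(relu_grad(x))  # prints [0. 0. 1.]

# Toy assumption: 10 layers, each unit with pre-activation 1.0, weights ignored.
layers = 10
print(sigmoid_grad(1.0) ** layers)  # product of sigmoid derivatives shrinks toward 0
print(relu_grad(1.0) ** layers)     # product of ReLU derivatives stays 1.0
```

Note that the same calculation with a negative pre-activation would give 0 for ReLU, which is why ReLU mitigates rather than eliminates gradient problems.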
