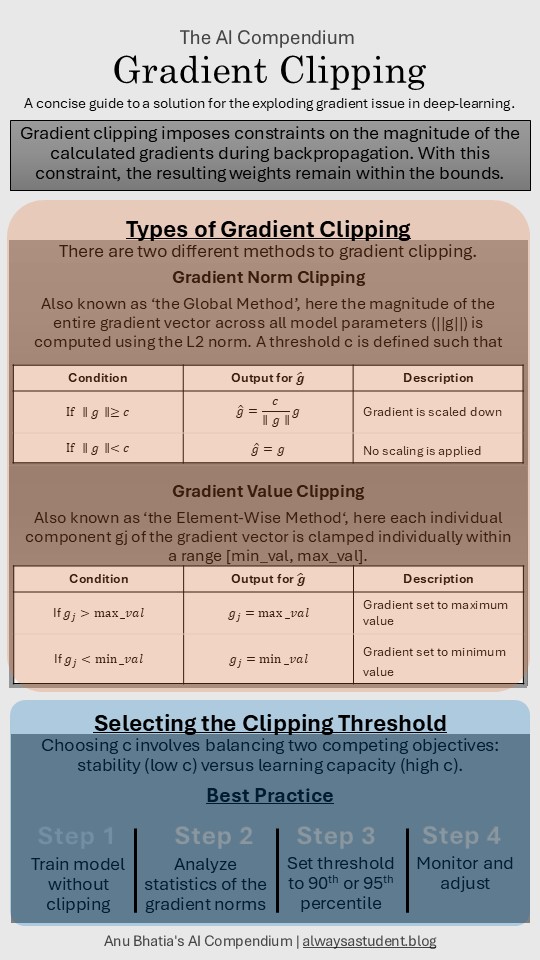

Gradient clipping prevents the exploding gradient problem by limiting how large the adjustments to a neural network's weights can be during a single update.

It constrains the magnitude of the gradients computed during backpropagation so that the resulting weight updates remain within safe and manageable bounds, leading to stable and efficient training dynamics.

There are two different methods of gradient clipping:

- Gradient Norm Clipping

  - Also known as ‘The Global Method’

  - Often the preferred technique for large and complex neural networks

  - Regulates the magnitude of the entire gradient vector across all model parameters, rescaling it when its norm exceeds a chosen threshold

  - The direction of the gradient is preserved; only its length changes

- Gradient Value Clipping

  - Also known as ‘The Element-Wise Method’

  - Useful for suppressing outlier gradient values in specific layers, for example in deep convolutional neural networks (CNNs)

  - Each component of the gradient vector is clamped independently within a predefined range [min_val, max_val], typically symmetric around zero

  - The direction of the gradient vector can be distorted, because components are clipped independently of one another
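The difference between the two methods is easiest to see on a small example. The following is a minimal sketch in plain Python, not a library implementation: the helper names `clip_by_global_norm` and `clip_by_value`, the use of the L2 norm, and the symmetric range `[-clip_value, clip_value]` are assumptions made for illustration, and the gradient is treated as a flat list of numbers.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale all components together so the L2 norm is at most max_norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)          # already within bounds, leave unchanged
    scale = max_norm / total_norm   # same factor for every component
    return [g * scale for g in grads]

def clip_by_value(grads, clip_value):
    """Clamp each component independently to [-clip_value, clip_value]."""
    return [max(-clip_value, min(clip_value, g)) for g in grads]

grads = [3.0, 4.0]  # L2 norm is 5.0

# Norm clipping keeps the 3:4 ratio between components (roughly [0.6, 0.8]).
norm_clipped = clip_by_global_norm(grads, max_norm=1.0)

# Value clipping clamps both components to 1.0, so the 3:4 ratio is lost.
value_clipped = clip_by_value(grads, clip_value=1.0)
```

In PyTorch, the corresponding utilities are `torch.nn.utils.clip_grad_norm_` and `torch.nn.utils.clip_grad_value_`, which operate in place on the gradients of a model's parameters.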

Gradient clipping is implemented in the standard training workflow by placing it between gradient computation and parameter update. The standard sequence is:

- Run the forward pass and compute the loss

- Compute gradients (backward pass)

- Apply gradient clipping

- Update model parameters
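The sequence above can be sketched as a single training step. This is an illustrative example only, assuming a one-variable linear model trained with mean squared error and plain gradient descent; `train_step`, `clip_by_global_norm`, the learning rate, and the `max_norm` threshold are hypothetical choices, not values taken from the text.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Rescale the gradient list so its L2 norm is at most max_norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads)
    scale = max_norm / total_norm
    return [g * scale for g in grads]

def train_step(w, b, xs, ys, lr, max_norm):
    n = len(xs)
    # 1. Forward pass and loss (mean squared error)
    preds = [w * x + b for x in xs]
    loss = sum((p - y) ** 2 for p, y in zip(preds, ys)) / n
    # 2. Compute gradients of the loss with respect to w and b
    grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
    grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
    # 3. Apply gradient clipping before the gradients are used
    grad_w, grad_b = clip_by_global_norm([grad_w, grad_b], max_norm)
    # 4. Update model parameters with the clipped gradients
    return w - lr * grad_w, b - lr * grad_b, loss

xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = 0.0, 0.0
losses = []
for _ in range(500):
    w, b, loss = train_step(w, b, xs, ys, lr=0.05, max_norm=1.0)
    losses.append(loss)
```

Because clipping happens after the gradients are computed and before they are applied, no single step can change the parameters by more than `lr * max_norm` in norm, even while the early gradients are large. In a PyTorch loop, the same placement would be between `loss.backward()` and `optimizer.step()`.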