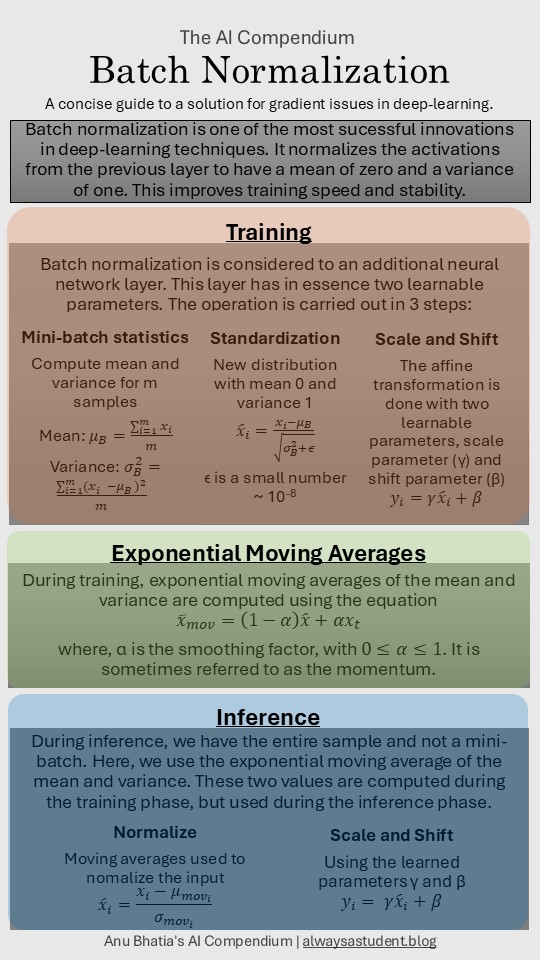

Batch normalization is a method of reparametrizing any layer, input or hidden, in a deep neural network.

Batch Normalization, or BN, resolves the vanishing gradient problem by ensuring that activations remain in the non-saturated regions of non-linear functions. This is achieved by forcing the inputs to have zero mean and unit variance.

It resolves the exploding gradient problem by dampening large parameter growth. This is achieved by re-scaling the activations to unit variance before applying non-linearity.

Further Reading:

Sergey Ioffe, Christian Szegedy. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. Link

Shibani Santurkar, Dimitris Tsipras, Andrew Ilyas, Aleksander Madry. How Does Batch Normalization Help Optimization? Link

Ketan Doshi. Batch Norm Explained Visually – How it works, and why neural networks need it. Link.

Ian Goodfellow, Yoshua Bengio, & Aaron Courville (2016). Deep Learning. MIT Press. Link