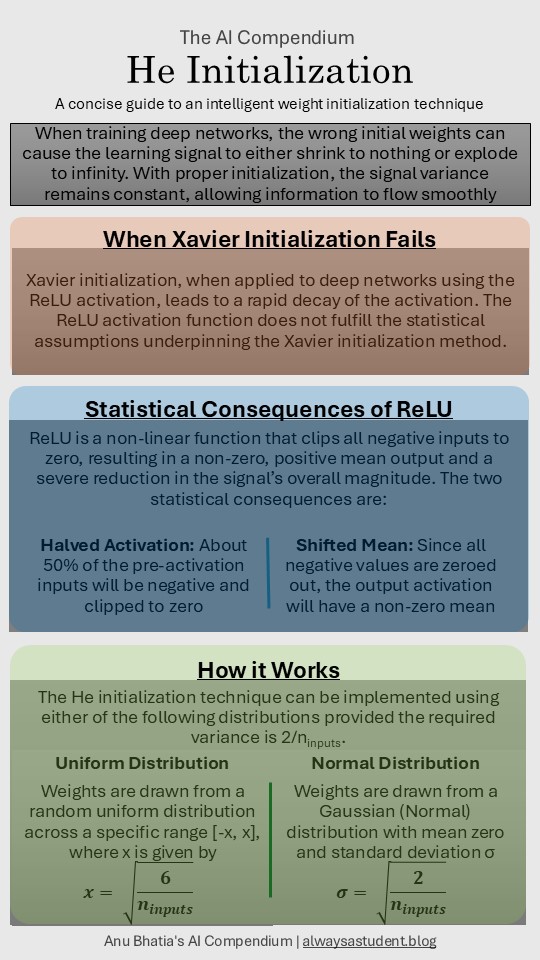

Also known as Kaiming Initialization, this technique was developed for neural networks that used the ReLU activation function.

ReLU is a non-linear function that clips all negative inputs to zero. This results in a non-zero, positive mean and a reduction in the signal’s overall magnitude. When we apply Xavier initialization to deep networks using ReLU, it leads to gradient problems.

The He initialization technique solves the variance instability issue for deep neural networks by adjusting the variance of the weight to compensate for the signal suppression that is inherent to the ReLU activation function. It does this by calculating the weight variance as a function of only the number of inputs and the corrective factor of 2.

Further Reading:

K. He, X. Zhang, S, Ren and J. Sun. Delving Deep into Rectifiers: Surpassing Human-Level Performance on ImageNet Classification. https://arxiv.org/abs/1502.01852

Kaiming Initialization in Deep Learning. Geeks for geeks. https://www.geeksforgeeks.org/deep-learning/kaiming-initialization-in-deep-learning/

Kaiming He Initialization in Neural Networks – Math Proof. Ester Hlav. https://towardsdatascience.com/kaiming-he-initialization-in-neural-networks-math-proof-73b9a0d845c4/