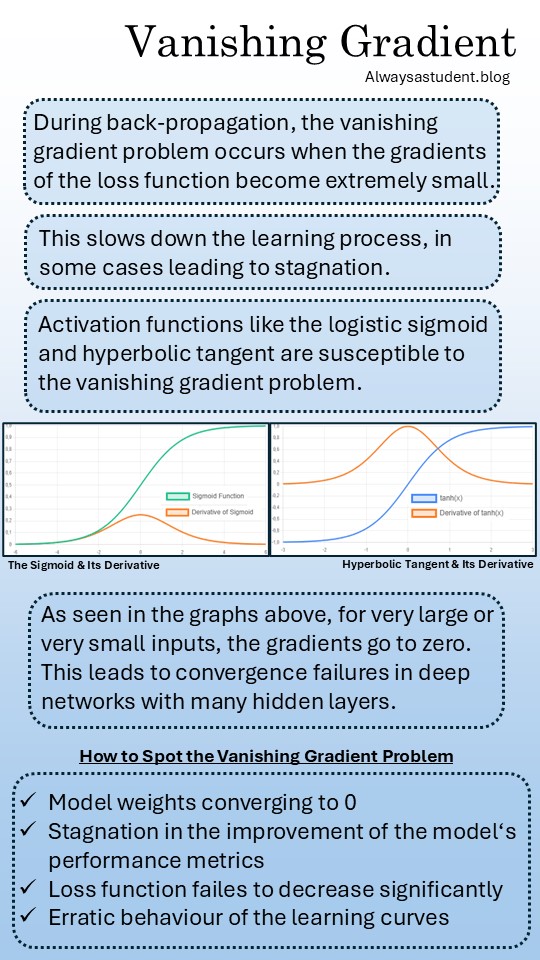

The vanishing gradient problem can plague deep neural networks which consists of many hidden layers. It generally occurs when the derivatives of the activation functions are less than one. This leads to the multiplication of small numbers during back-propagation, which in turn shrink the gradients exponentially.

As a result of this issue, the network will take a long time to converge or may not converge at all. This slows down the learning process.

Spotting the Vanishing Gradient Problem

Monitoring the training dynamics of a neural network makes it to easy to look for this issue. Here are some ways to spot the vanishing gradient problem:

- Observe if the model weights converge to zero: A key sign is when the weights of your neural network layers fail to update and instead converge toward zero. This happens because the gradients, which are used to update the weights, become so tiny that they don’t have a meaningful effect.

- Stagnant performance: If your model’s performance stops improving, it could be a sign of vanishing gradients. The network isn’t learning from the data, so its accuracy, precision, or other metrics plateau.

- Loss function plateaus: The loss function is a measure of how wrong your model’s predictions are. When the loss stops decreasing significantly during training, it indicates that the network is no longer learning.

2 responses to “The Vanishing Gradient Problem”

[…] to the vanishing gradient problem, the issue of exploding gradients arises during backpropagation. In this chain-reaction-like […]

LikeLike

[…] Normalization, or BN, resolves the vanishing gradient problem by ensuring that activations remain in the non-saturated regions of non-linear functions. This is […]

LikeLike