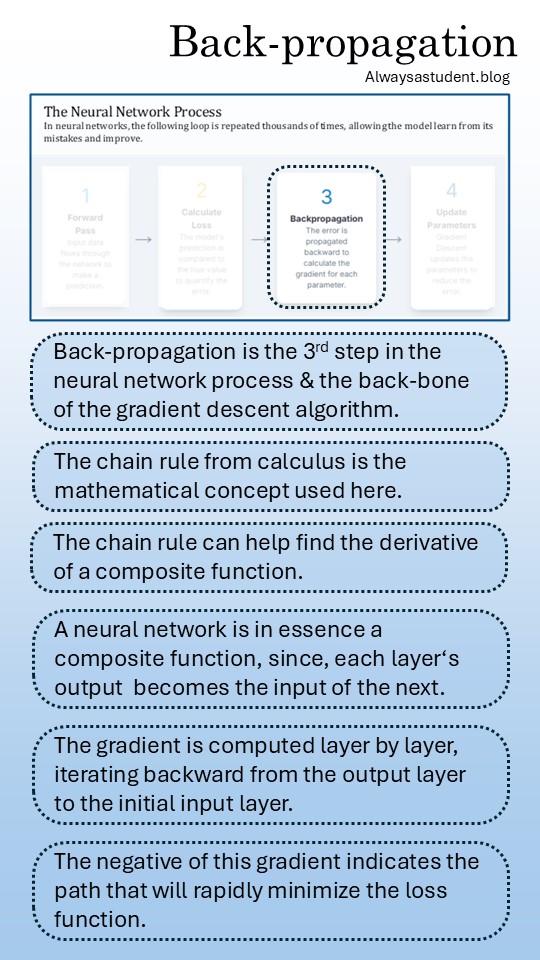

Back-propagation is a computational method that computes the gradient of the loss function in neural networks. This is the third step in the neural network process.

The mathematical concept used here is the chain rule from calculus. The chain rule helps in finding the derivative of a composite function, and a neural network can be considered as a giant composite function.

Back-propagation is a very efficient method to compute the gradients of the loss-function because it avoids redundant calculations of intermediate terms. The gradient is computed layer by layer, starting at the output layer all the way up to the input layer.

3 responses to “Back-propagation”

[…] activation functions are less than one. This leads to the multiplication of small numbers during back-propagation, which in turn shrink the gradients […]

LikeLike

[…] to the vanishing gradient problem, the issue of exploding gradients arises during backpropagation. In this chain-reaction-like scenario, gradients become excessively large, causing model weights to […]

LikeLike

[…] Back-propagation calculates the gradient of the loss function with respect to the weights and updates the weights to reduce the error. The main mathematical principle used here is the chain rule. However, the repeated multiplication inherent in this process can lead to either the vanishing or the exploding gradient problem. […]

LikeLike