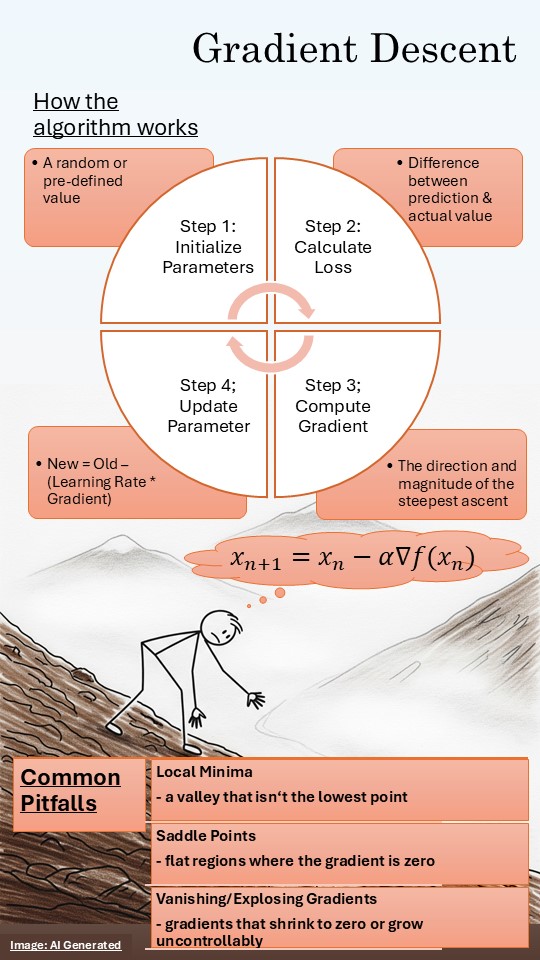

- Gradient descent is an optimization algorithm used to minimize the cost function in machine learning models.

- The goal is to find the model parameters that minimize this function, thereby reducing the model's prediction error.

- The method is often explained with the analogy of hiking down a hill or mountain: at each step, you walk in the direction in which the ground slopes down most steeply.

- The algorithm iteratively adjusts the parameters by stepping in the direction opposite to the cost function's gradient, because the gradient points in the direction of steepest increase.
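Here is a minimal Python sketch of the update described above. It assumes a fixed learning rate (step size) η, a standard ingredient not named in these notes, and applies the rule θ ← θ − η ∇J(θ). The function names, the toy data lying on the line y = 2x + 1, and the mean-squared-error cost are hypothetical choices for illustration only.

```python
def gradient_descent(grad, params, learning_rate=0.1, n_steps=1000):
    """Repeatedly step against the gradient: params <- params - lr * grad(params)."""
    params = list(params)
    for _ in range(n_steps):
        g = grad(params)
        # Subtracting the gradient moves "downhill" on the cost surface.
        params = [p - learning_rate * gi for p, gi in zip(params, g)]
    return params


# Hypothetical example data: points exactly on the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]


def mse_cost(params):
    """Mean squared error of the line w*x + b on the example data."""
    w, b = params
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_grad(params):
    """Analytic gradient of mse_cost with respect to (w, b)."""
    w, b = params
    n = len(xs)
    residuals = [w * x + b - y for x, y in zip(xs, ys)]
    dw = 2.0 / n * sum(r * x for r, x in zip(residuals, xs))
    db = 2.0 / n * sum(residuals)
    return [dw, db]


# Start from w = 0, b = 0 and let gradient descent find the best-fit line.
w, b = gradient_descent(mse_grad, [0.0, 0.0], learning_rate=0.05, n_steps=2000)
print(f"w = {w:.3f}, b = {b:.3f}, cost = {mse_cost([w, b]):.6f}")
```

The minus sign in the update is the hiking analogy in code: the gradient points uphill, so stepping against it lowers the cost. The learning rate controls the step length. If it is too large the steps overshoot and the cost can diverge; for this particular toy data, values above roughly 0.15 do.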