The perceptron is a basic artificial neuron and is considered a building block of neural networks. It takes inputs, applies weights, and produces an output through an activation function. It is a supervised learning algorithm and a binary classifier that separates data points using a linear combination of the input variables.

The algorithm consists of:

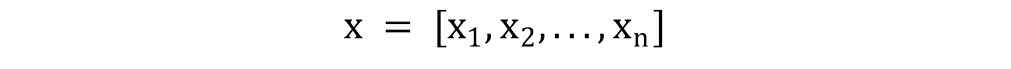

- an input vector of the form

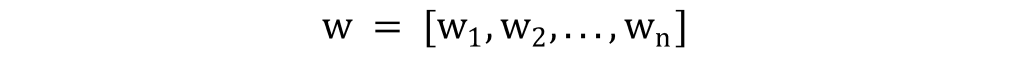

- a weight vector

- a bias denoted as b

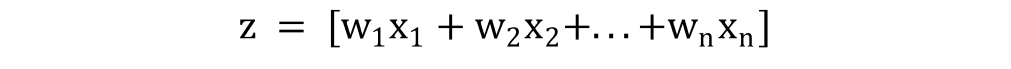

- the summation function z

- an activation function
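To make the roles of these components concrete, here is a minimal Python sketch of a single forward pass. It assumes a step activation that outputs 1 when z is at least 0 (the value at exactly 0 is a convention, not something fixed by the text). The function names `weighted_sum`, `heaviside`, and `predict`, and the hand-picked weights and bias, are hypothetical and only for illustration.

```python
def weighted_sum(x, w, b):
    """Summation function z: dot product of weights and inputs, plus the bias."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def heaviside(z):
    """Step activation (assumed convention: 1 when z >= 0, otherwise 0)."""
    return 1 if z >= 0 else 0


def predict(x, w, b):
    """Perceptron output: the activation applied to the weighted sum."""
    return heaviside(weighted_sum(x, w, b))


# Hypothetical weights and bias, chosen by hand for illustration only
w = [1.0, 1.0]
b = -1.5

print(predict([1, 1], w, b))  # z = 0.5  -> 1
print(predict([0, 1], w, b))  # z = -0.5 -> 0
```

With these particular values the neuron fires only when both inputs are 1; different weights or a different bias would move the decision boundary.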

The mathematical concepts needed in order to understand the perceptron algorithm are:

- definition of independent variables

- what a linear equation is

- vectors

- dot product

- the Heaviside step function
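As a compact reference for how these concepts fit together, the following is a sketch in standard notation. The symbols $n$ (number of inputs), $x_i$, $w_i$, and $H$ are assumptions introduced here, and the value of the step function at $z = 0$ is a convention that varies between texts.

$$
z = \mathbf{w} \cdot \mathbf{x} + b = \sum_{i=1}^{n} w_i x_i + b,
\qquad
H(z) =
\begin{cases}
1 & \text{if } z \ge 0 \\
0 & \text{if } z < 0
\end{cases}
$$

Setting $z = 0$ gives the linear equation $\mathbf{w} \cdot \mathbf{x} + b = 0$, which describes the boundary between the two classes.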

NOTE: The perceptron can learn the OR and AND functions but not XOR, because XOR is not linearly separable: no single straight line separates the inputs whose output is 1 from those whose output is 0.
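The note above can be checked with a small experiment. The sketch below uses the classic perceptron learning rule (nudge the weights and bias by the prediction error on each misclassified sample), which is an assumption here since the training procedure has not been described yet. The names `train_perceptron` and `fits`, the learning rate, and the epoch limit are all illustrative choices.

```python
def step(z):
    """Step activation (assumed convention: 1 when z >= 0, otherwise 0)."""
    return 1 if z >= 0 else 0


def train_perceptron(samples, epochs=100, lr=1.0):
    """Train with the classic perceptron update rule; returns (weights, bias)."""
    w = [0.0] * len(samples[0][0])
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            z = sum(wi * xi for wi, xi in zip(w, x)) + b
            error = target - step(z)  # -1, 0, or +1
            if error != 0:
                # Move the boundary toward classifying this sample correctly
                w = [wi + lr * error * xi for wi, xi in zip(w, x)]
                b += lr * error
                mistakes += 1
        if mistakes == 0:  # every sample classified correctly: stop early
            break
    return w, b


def fits(samples):
    """True if a trained perceptron classifies every sample correctly."""
    w, b = train_perceptron(samples)
    return all(
        step(sum(wi * xi for wi, xi in zip(w, x)) + b) == target
        for x, target in samples
    )


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
OR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

print(fits(AND))  # True
print(fits(OR))   # True
print(fits(XOR))  # False
```

AND and OR converge after a few passes over the data, while XOR never reaches zero mistakes no matter how many epochs are allowed, since no linear boundary classifies all four points correctly.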